Kaggle房价预测实践系列3-特征工程

完成了前述的数据清理工作,有时也叫做数据清洗。接下来,我们需要进行特征工程的另一个重要环节构造特征和特征变换阶段。实践中,对特征的构造往往是基于对业务的了解甚至是直觉、常识等来进行的。在这里,我们会对一些与房屋关系密切的特征来处理,比如说楼层总面积,每个房间的浴室和走廊面积等。

构造特征

all_features['BsmtFinType1_Unf'] = 1*(all_features['BsmtFinType1'] == 'Unf')

all_features['HasWoodDeck'] = (all_features['WoodDeckSF'] == 0) * 1

all_features['HasOpenPorch'] = (all_features['OpenPorchSF'] == 0) * 1

all_features['HasEnclosedPorch'] = (all_features['EnclosedPorch'] == 0) * 1

all_features['Has3SsnPorch'] = (all_features['3SsnPorch'] == 0) * 1

all_features['HasScreenPorch'] = (all_features['ScreenPorch'] == 0) * 1

all_features['YearsSinceRemodel'] = all_features['YrSold'].astype(int) - all_features['YearRemodAdd'].astype(int)

all_features['Total_Home_Quality'] = all_features['OverallQual'] + all_features['OverallCond']

all_features = all_features.drop(['Utilities', 'Street', 'PoolQC',], axis=1)

all_features['TotalSF'] = all_features['TotalBsmtSF'] + all_features['1stFlrSF'] + all_features['2ndFlrSF']

all_features['YrBltAndRemod'] = all_features['YearBuilt'] + all_features['YearRemodAdd']

all_features['Total_sqr_footage'] = (all_features['BsmtFinSF1'] + all_features['BsmtFinSF2'] +

all_features['1stFlrSF'] + all_features['2ndFlrSF'])

all_features['Total_Bathrooms'] = (all_features['FullBath'] + (0.5 * all_features['HalfBath']) +

all_features['BsmtFullBath'] + (0.5 * all_features['BsmtHalfBath']))

all_features['Total_porch_sf'] = (all_features['OpenPorchSF'] + all_features['3SsnPorch'] +

all_features['EnclosedPorch'] + all_features['ScreenPorch'] +

all_features['WoodDeckSF'])

all_features['TotalBsmtSF'] = all_features['TotalBsmtSF'].apply(lambda x: np.exp(6) if x <= 0.0 else x)

all_features['2ndFlrSF'] = all_features['2ndFlrSF'].apply(lambda x: np.exp(6.5) if x <= 0.0 else x)

all_features['GarageArea'] = all_features['GarageArea'].apply(lambda x: np.exp(6) if x <= 0.0 else x)

all_features['GarageCars'] = all_features['GarageCars'].apply(lambda x: 0 if x <= 0.0 else x)

all_features['LotFrontage'] = all_features['LotFrontage'].apply(lambda x: np.exp(4.2) if x <= 0.0 else x)

all_features['MasVnrArea'] = all_features['MasVnrArea'].apply(lambda x: np.exp(4) if x <= 0.0 else x)

all_features['BsmtFinSF1'] = all_features['BsmtFinSF1'].apply(lambda x: np.exp(6.5) if x <= 0.0 else x)

all_features['haspool'] = all_features['PoolArea'].apply(lambda x: 1 if x > 0 else 0)

all_features['has2ndfloor'] = all_features['2ndFlrSF'].apply(lambda x: 1 if x > 0 else 0)

all_features['hasgarage'] = all_features['GarageArea'].apply(lambda x: 1 if x > 0 else 0)

all_features['hasbsmt'] = all_features['TotalBsmtSF'].apply(lambda x: 1 if x > 0 else 0)

all_features['hasfireplace'] = all_features['Fireplaces'].apply(lambda x: 1 if x > 0 else 0)

这部分的工作并不复杂,一般来说简单的四则运算和 if 条件判断就可以全部覆盖。在一些涉及到权重组合的新特征上,对权重的选择往往会从一半开始,二分之一位,四分之一位,四分之三位这些显著的位置往往是灵感的起源。

特征变换

为什么要做特征变换呢?这是因为我们能得到的数据往往并不丰富,数据欠缺导致的训练不足的影响往往更大,因此我们希望通过各种方式来丰富我们的数据。特征变换就是其中之一,这里的变换可以同比于我们在观察一张图片时执行的放大和缩小等操作,数据在必要的时候,也需要这种放大和缩小,以便于研究者可以从宏观调控,也可以从微观布局。现在已经有了很多的自动的特征变换工具,比如FeatureTools。我们这里因为规模的原因,暂时没有用到自动特征的工具。而是手动将特征变换的过程拆解执行。

- 首先是取对数(log)

def logs(res, ls):

m = res.shape[1]

for l in ls:

res = res.assign(newcol=pd.Series(np.log(1.01+res[l])).values)

res.columns.values[m] = l + '_log'

m += 1

return res

log_features = ['LotFrontage','LotArea','MasVnrArea','BsmtFinSF1','BsmtFinSF2','BsmtUnfSF',

'TotalBsmtSF','1stFlrSF','2ndFlrSF','LowQualFinSF','GrLivArea',

'BsmtFullBath','BsmtHalfBath','FullBath','HalfBath','BedroomAbvGr','KitchenAbvGr',

'TotRmsAbvGrd','Fireplaces','GarageCars','GarageArea','WoodDeckSF','OpenPorchSF',

'EnclosedPorch','3SsnPorch','ScreenPorch','PoolArea','MiscVal','YearRemodAdd','TotalSF']

all_features = logs(all_features, log_features)

取log的原因是为了让训练特征更趋于标准的正态分布,这一点与前面预测属性执行取对数操作的原因一致。

- 求平方 求平方的操作,可以对大的数据进行放大,小的数据进行缩小。这样由于大的数据和小的数据的差值被进一步扩大,那么数据所能体现出来的特征也将更加明显。

def squares(res, ls):

m = res.shape[1]

for l in ls:

res = res.assign(newcol=pd.Series(res[l]*res[l]).values)

res.columns.values[m] = l + '_sq'

m += 1

return res

squared_features = ['YearRemodAdd', 'LotFrontage_log',

'TotalBsmtSF_log', '1stFlrSF_log', '2ndFlrSF_log', 'GrLivArea_log',

'GarageCars_log', 'GarageArea_log']

all_features = squares(all_features, squared_features)

- 特征工程收尾工作

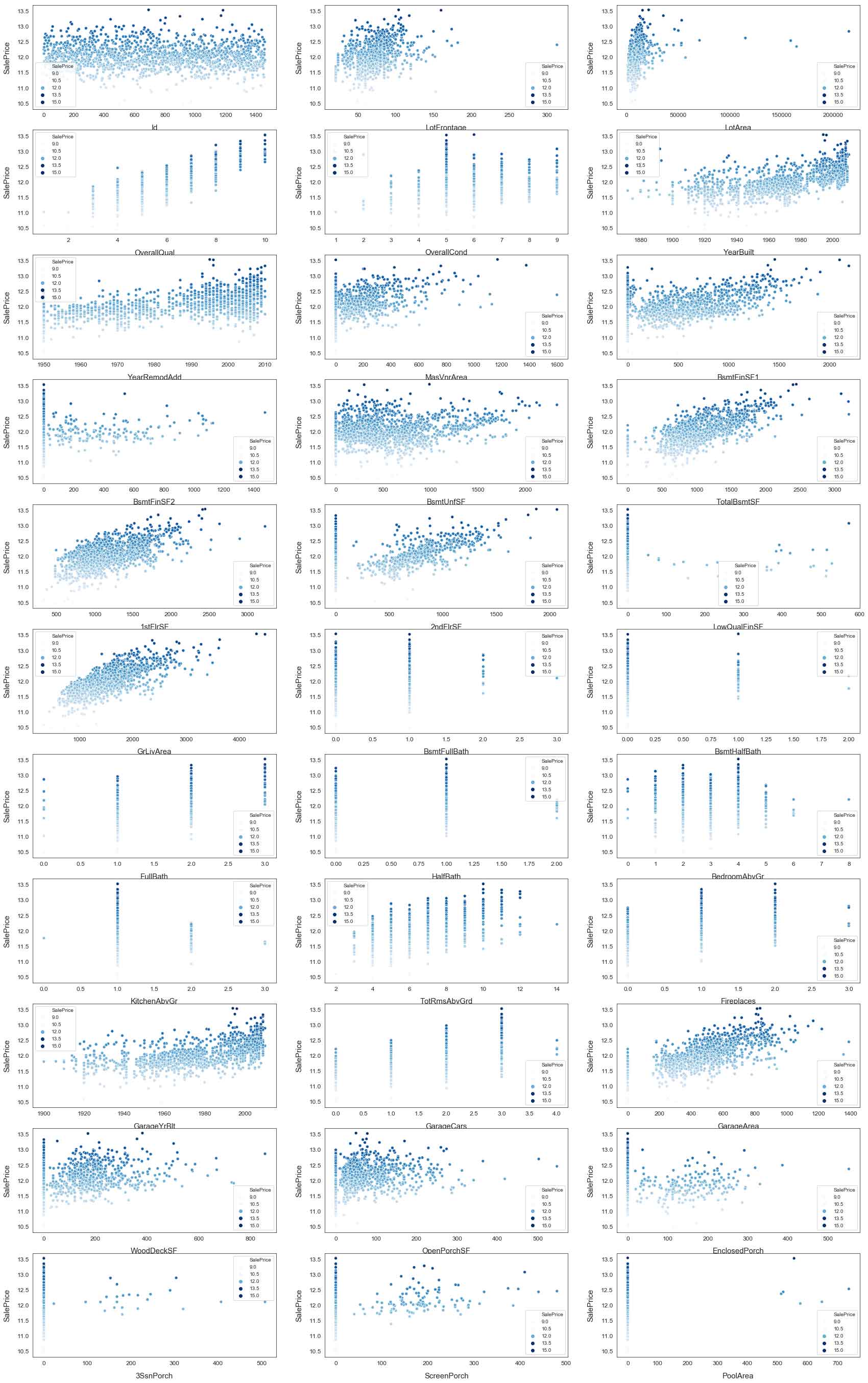

此时我们需要再次对数据进行可视化展示,在确定没有其他问题了之后,

- 如果所选择的模型更利于处理数值型的特征,那么,我们需要将字符型特征转换为数值型。

- 处理结果中如果存在重复的特征,考虑将其删除。

- 将处理好的数据重新构建新的训练集和测试集。

numeric_dtypes = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']

numeric = []

for i in X.columns:

if X[i].dtype in numeric_dtypes:

if i in ['TotalSF', 'Total_Bathrooms','Total_porch_sf','haspool','hasgarage','hasbsmt','hasfireplace']:

pass

else:

numeric.append(i)

# visualising some more outliers in the data values

fig, axs = plt.subplots(ncols=2, nrows=0, figsize=(12, 150))

plt.subplots_adjust(right=2)

plt.subplots_adjust(top=2)

sns.color_palette("husl", 8)

for i, feature in enumerate(list(X[numeric]), 1):

if(feature=='MiscVal'):

break

plt.subplot(len(list(numeric)), 3, i)

sns.scatterplot(x=feature, y='SalePrice', hue='SalePrice', palette='Blues', data=train)

plt.xlabel('{}'.format(feature), size=15,labelpad=12.5)

plt.ylabel('SalePrice', size=15, labelpad=12.5)

for j in range(2):

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.legend(loc='best', prop={'size': 10})

plt.show()

到这里,是真正的特征工程结束了,下个阶段,要正式进入到模型的训练阶段了。

PREVIOUSKaggle房价预测实践系列4-模型训练